Pagination is usually overlooked because… honestly, I'm not sure why. My best guess is that the default system works as is, so why bother? Or is it because it's boring?

Here are Google's official recommendations for pagination; if you're unfamiliar with them, they're a great place to start. Come back after reading them (or use the TL;DR below), as we won't be covering the basics. https://developers.google.com/search/docs/specialty/ecommerce/pagination-and-incremental-page-loading

TL;DR

- Each page needs its own unique URL (no # fragment identifiers)

- Don't canonicalize paginated pages to the first page; each page should carry its own canonical URL

- Google no longer uses rel="next" and rel="prev" 🤯
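To make the first two points concrete, here is a minimal sketch that builds a unique URL for each page with a query parameter (never a # fragment) and a self-referencing canonical tag. The `page` parameter name, the example domain and the helper names are hypothetical; use whatever URL pattern your CMS already has.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_url(base_url: str, page: int) -> str:
    """Return a unique URL for `page` using a query parameter, never a #fragment."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    if page > 1:
        query["page"] = str(page)  # hypothetical parameter name
    else:
        query.pop("page", None)  # page 1 lives at the clean base URL
    # The fragment is always dropped: Google ignores it, so it can't distinguish pages.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), ""))


def canonical_tag(base_url: str, page: int) -> str:
    """Self-canonical: page N points to page N, never to page 1."""
    return f'<link rel="canonical" href="{page_url(base_url, page)}">'


print(canonical_tag("https://example.com/news", 3))
```

Putting page 1 at the bare URL (rather than `?page=1`) is a design choice that avoids two URLs for the same first page.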

Pagination doesn’t have to be boring

Take, for instance, Ghostblocks pagination: it's probably the best solution for decreasing a site's depth, and it makes a lot of sense from a UX perspective.

I strongly suggest reading the full article on Audisto (see the links at the end). It was an eye-opener for me and will hopefully make you realize that pagination can be a "fun" brain-teaser.
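Why this matters for depth: with plain next/prev links, page N of a listing sits N-1 clicks away from page 1. Below is a minimal sketch comparing that with a simplified skip-link scheme in the spirit of Ghostblocks, where each page also links to the pages 10 and 100 positions away. That scheme is a hypothetical stand-in, not Audisto's exact Ghostblocks algorithm; see their guide for the real pattern.

```python
from collections import deque


def next_prev_links(page: int, total: int) -> list[int]:
    """Plain pagination: each page links only to its direct neighbours."""
    return [p for p in (page - 1, page + 1) if 1 <= p <= total]


def skip_links(page: int, total: int, steps=(1, 10, 100)) -> list[int]:
    """Hypothetical Ghostblocks-style pagination: neighbours plus jumps of +/-10 and +/-100."""
    candidates = [page + s for s in steps] + [page - s for s in steps]
    return [p for p in candidates if 1 <= p <= total]


def max_click_depth(total: int, links) -> int:
    """Breadth-first search from page 1; returns the clicks needed to reach the deepest page."""
    depth = {1: 0}
    queue = deque([1])
    while queue:
        page = queue.popleft()
        for target in links(page, total):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return max(depth.values())


total_pages = 1000
print(max_click_depth(total_pages, next_prev_links))  # 999
print(max_click_depth(total_pages, skip_links))
```

For 1,000 pages, the next/prev-only listing needs 999 clicks to reach the last page, while the skip-link version stays under 30: jumping by hundreds, then tens, then single pages always gives a path of at most 27 clicks.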

My SEO-epiphany on pagination

In my in-house SEO career, I've dealt with many large websites (from 100k pages to several million), and pagination offers interesting challenges if you want to improve UX and optimize your crawl. I often implemented Ghostblocks as a one-size-fits-all solution with great success, until yet another "it depends" revelation.

Over the past years, I’ve been working as an SEO for big publishers (chronologically: Future, DigitalTrends, Dotdash Meredith, ZiffDavis), and by definition, a publisher publishes a lot.


Most of the content stays under control thanks to a predictable publication and update calendar: product reviews, event coverage, buying guides, etc.

The rest of the website (e.g. the news) is far less predictable.

In my opinion, the most common mistake is thinking we can have one pagination system for the whole website, across all content types. Not all pages are equal, and your pagination should depend on one overlooked criterion: your pages' lifespan.

Once a page is indexed, how often does it need to be found again, or re-crawled?

Goal-oriented approach

The two obvious goals for an SEO-optimized pagination are:

- Help users browse a very large website (e.g. a news site or a forum) efficiently

- Decrease your website's depth (i.e. the distance in clicks from a starting point, usually the homepage, to other pages); it usually makes sense to keep your most important pages within the first levels
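Click depth, as defined above, is a shortest-path distance, so a breadth-first search over your internal link graph computes it. A minimal sketch, assuming you already have a crawl export as a mapping of URL to outgoing internal links; the site structure and URLs below are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from `start` to every reachable URL."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        for target in links.get(url, []):
            if target not in depths:
                depths[target] = depths[url] + 1
                queue.append(target)
    return depths


# Hypothetical site: homepage -> section -> paginated listing -> articles
site = {
    "/": ["/news/", "/best-shows-netflix/"],
    "/news/": ["/news/?page=2", "/news/story-a"],
    "/news/?page=2": ["/news/?page=3", "/news/story-b"],
    "/news/?page=3": ["/news/story-c"],
}
print(click_depths(site)["/news/story-c"])  # 4
```

The evergreen page linked from the homepage sits at depth 1, while each extra listing page pushes older stories one click deeper.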

Use-cases and solutions

Below are three of the most frequent cases, with suggested solutions:

1 – An evergreen page that gets frequent updates (e.g. 'Best Shows on Netflix This Month') is naturally pushed back into the first levels every time it's updated, via the homepage and other 'latest' modules. It's also very likely that your high-interest pages are linked prominently throughout your website, maybe even in your top navigation.

Solution: Nothing to do here, yay \o/

(if that’s not the case, pagination is not your main issue)

2 – A page with a short lifespan that doesn't require updates (e.g. news) gains nothing from being crawled often once it's indexed.

Solutions:

- Infinite scroll: users can still browse, but crawlers have a much harder time (and may not get past a certain point at all)

- Archive section: limiting your crawlable paginated content to the last X days or X posts gives you better control over how often older content is crawled, by "burying" it deeper on the site. You can use a different URL structure (e.g. an /archive/ directory) + noindex to discourage crawlers from spending too much time there.
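The archive split can be sketched as a simple age rule deciding which listing a post appears in. A minimal sketch, assuming a 30-day window, an /archive/YYYY/MM/ URL pattern and the helper name `listing_for`, all of which are hypothetical. Note that the robots value applies to the listing page, not to the article itself.

```python
from datetime import date, timedelta


def listing_for(published: date, today: date, max_age_days: int = 30) -> dict[str, str]:
    """Decide where a post is listed: crawlable section pagination or the noindexed archive."""
    if today - published <= timedelta(days=max_age_days):
        # Recent posts stay in the regular, crawlable section pagination.
        return {"listing": "/news/", "robots": "index, follow"}
    # Older posts only appear in archive listings; those listing pages carry noindex.
    return {"listing": f"/archive/{published:%Y/%m}/", "robots": "noindex, follow"}


print(listing_for(date(2024, 1, 1), date(2024, 3, 1)))
```

Keeping "follow" on the archive pages is a judgment call: it lets crawlers still reach the articles, just less often.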

3 – An evergreen page that rarely or never needs updating (e.g. 'How to clean your oven with baking soda'), or a very large website such as a forum

Solutions:

- Ghostblocks: this is a typical case where the technique is very effective at keeping content only a few clicks away
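The three cases boil down to a decision keyed on page lifespan and update frequency. A minimal sketch of that mapping; the `Strategy` names and `pick_strategy` are hypothetical, and the article doesn't cover short-lived pages that get frequent updates, so this sketch groups them with case 2 as an assumption.

```python
from enum import Enum


class Strategy(Enum):
    NOTHING = "no change: updates and internal links keep it shallow"
    INFINITE_SCROLL_OR_ARCHIVE = "infinite scroll or a capped, noindexed archive"
    GHOSTBLOCKS = "Ghostblocks pagination"


def pick_strategy(evergreen: bool, frequently_updated: bool) -> Strategy:
    """Map the three use-cases to a pagination strategy, keyed on page lifespan."""
    if evergreen and frequently_updated:
        return Strategy.NOTHING  # case 1, e.g. 'Best Shows on Netflix This Month'
    if not evergreen:
        # Case 2, e.g. news. Assumption: short-lived but updated pages land here too.
        return Strategy.INFINITE_SCROLL_OR_ARCHIVE
    return Strategy.GHOSTBLOCKS  # case 3, e.g. how-tos, forums


print(pick_strategy(evergreen=True, frequently_updated=False).value)
```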

A rule of thumb…

Keep this in mind: the goal is to keep the important pages within the first levels, not ALL pages.

Also, pagination is only one way to achieve that goal. See the caveats below.

Some caveats, and tactics outside the scope of this article (i.e. pagination):

- Leverage internal linking!!! If a page is important to you, treat it as such and 'promote' it with internal links (related content, homepage, contextual navigation, etc.) so it isn't 'buried' too deep, regardless of the pagination system.

- Search engines are smart enough not to blindly follow links to deep pages, even when they're linked with Ghostblocks: e.g. page-899522 is less interesting to re-crawl than page-2.

- Sitemaps may or may not reflect that strategy (your call): from a crawl-frequency standpoint, making a page hard or impossible to find on your website makes little difference if it still appears in your sitemap.

- Internal search: at some point, the best way for users to find older content might be your internal search. Websites with a performant internal search and advanced filter options are rare, so it may be worth a look in some cases (e.g. forums).
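If you decide the sitemap should mirror the archive strategy, one option is to filter by age when generating it. A minimal sketch with Python's standard `xml.etree.ElementTree`; the URLs, the `build_sitemap` helper and the `max_age_days` cut-off are hypothetical, and using `lastmod` as the age signal is an assumption (a publication date may suit you better).

```python
import xml.etree.ElementTree as ET
from datetime import date, timedelta
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(posts: list[tuple[str, date]], today: date,
                  max_age_days: Optional[int] = None) -> str:
    """Build a sitemap; if max_age_days is set, drop posts older than the crawlable window."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in posts:
        if max_age_days is not None and today - lastmod > timedelta(days=max_age_days):
            continue  # mirror the on-site strategy: old posts stay out of the sitemap too
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    return ET.tostring(urlset, encoding="unicode")


posts = [
    ("https://example.com/news/story-a", date(2024, 3, 5)),
    ("https://example.com/news/story-b", date(2023, 1, 1)),
]
print(build_sitemap(posts, today=date(2024, 3, 10), max_age_days=30))
```

Passing `max_age_days=None` keeps every URL, which is the other option if you'd rather let the sitemap cover the whole archive.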

IRL examples:

NYTimes

NYTimes doesn't paginate its sections (it uses infinite scroll, which isn't crawlable but is still user-friendly), making it very hard to browse to an old article.

EDIT: they have added pagination since I first wrote this article, but it stops at page 10 (still with infinite scroll).

Techradar

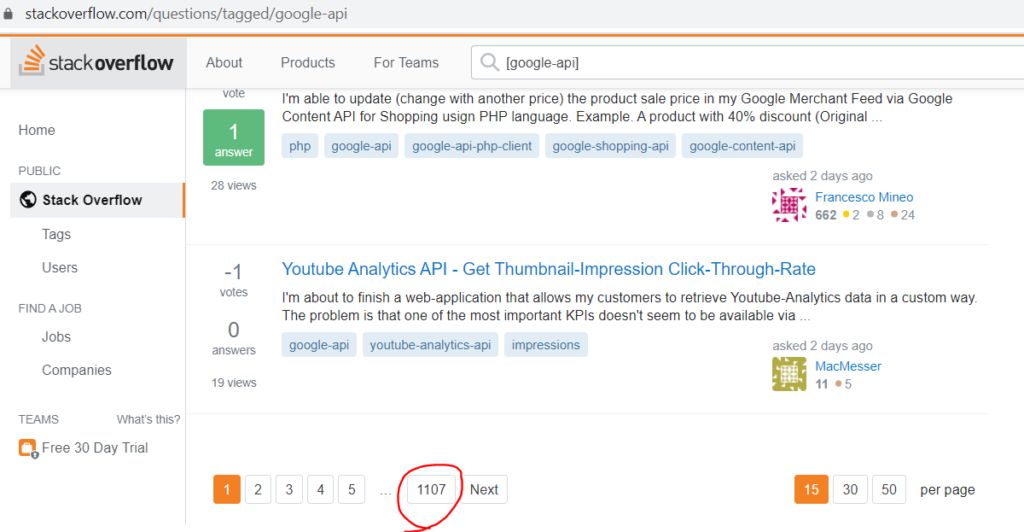

StackOverflow

Read Also:

- https://audisto.com/guides/pagination/

- https://www.jcchouinard.com/pagination-seo/

- https://builtvisible.com/solving-site-architecture-issues/

- https://www.oritmutznik.com/ecommerce-seo/pagination-best-practices-ecommerce-case-study-and-tips-for-search-friendly-pagination


SEO/Data Enthusiast

I help international organizations and large-scale websites to grow intent-driven audiences on transactional content and to develop performance-based strategies.

Currently @ZiffDavis – Lifehacker, Mashable, PCMag

ex @DotdashMeredith, @FuturePLC